In a previous blog post I looked into converting a custom markup text format into "proper" PDF and epub documents. The format at the time was very simple and could not do even simple things like italic text. At the time it was ok, but as time went on it seemed a bit unsatisfactory.

Ergo, here is a sample input document:

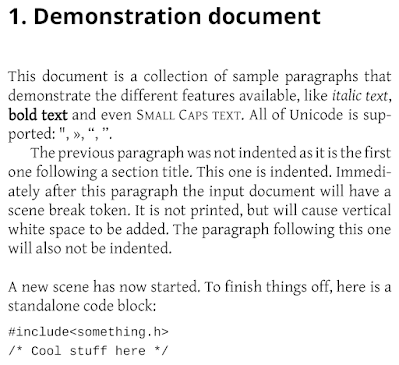

This is "Markdown-like" but specifically not Markdown, because novel typesetting has requirements that can't easily be retrofitted into Markdown. When processed this will yield the following output:

Links to generated documents: PDF, epub. The code can be found on GitHub.

A look in the code

An old saying goes that the natural data structure for any problem is an array and if it is not, then change the problem so that it is. This turned out very much to be the case in this problem. The document is an array of variants (section, paragraph, scene change etc). Text is an array of words (split at whitespace) which get processed into output, which is an array of formatted lines. Each line is an array of formatted words.
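To make the "array of variants" idea concrete, here is a minimal sketch using std::variant. The type names (Section, Paragraph, SceneChange, DocElement, Document) and the helper functions are assumptions made for illustration, not the names used in the actual code.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <variant>
#include <vector>

// Hypothetical element types; the real project may use different names and fields.
struct Section {
    int number;
    std::string title;
};
struct Paragraph {
    std::vector<std::string> words;  // text already split at whitespace
};
struct SceneChange {};

using DocElement = std::variant<Section, Paragraph, SceneChange>;
using Document = std::vector<DocElement>;

// Split a run of text into words at whitespace.
std::vector<std::string> split_words(const std::string& text) {
    std::istringstream in(text);
    std::vector<std::string> words;
    std::string word;
    while (in >> word) {
        words.push_back(word);
    }
    return words;
}

// Walk the document in order and count the words in all paragraphs.
std::size_t count_words(const Document& doc) {
    std::size_t total = 0;
    for (const auto& element : doc) {
        if (const auto* paragraph = std::get_if<Paragraph>(&element)) {
            total += paragraph->words.size();
        }
    }
    return total;
}
```

Because everything is a flat array, later processing steps can be plain loops over the elements instead of tree traversals.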

For computing the global chapter justification and final PDF it turned out that we need to be able to render each word in its final formatted form, and also hyphenated sub-forms, in isolation. This means that the elementary data structure is this:

    struct EnrichedWord {
        std::string text;
        std::vector<HyphenPoint> hyphen_points;
        std::vector<FormattingChange> format;
        StyleStack start_style;
    };

This is "pure data" and fully self-contained. The fields are mostly self-explanatory: text has the actual text in UTF-8. hyphen_points lists all points where the word can be hyphenated and how. For example if you split the word "monotonic" in the middle you'd need to add a hyphen to the output, but if you split the hypothetical combination word "meta–avatar" in the middle you should not add a hyphen, because the first half already ends in an en-dash. format contains all points within the word where styling changes (e.g. italic starts or ends). start_style is the only trickier one. It lists all styles (italic, bold, etc) that are "active" at the start of the word and the order in which they have been declared. Since formatting tags can't be nested, this is needed to compute and validate style changes within the word.
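The "and how" part of a hyphen point can be sketched roughly like this. The post does not show the real definition of HyphenPoint, so the layout below (a byte offset plus a SplitKind enum) and the split_at helper are assumptions for illustration only.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>

// Hypothetical: how the first half of a split word should end.
enum class SplitKind {
    AddHyphen,  // e.g. "mono-" + "tonic"
    NoHyphen,   // the first half already ends in a dash
};

// Hypothetical layout of a hyphen point.
struct HyphenPoint {
    std::size_t offset;  // byte offset in the UTF-8 text where the second half starts
    SplitKind kind;
};

// Return the two halves of the word as they would appear at the end of one
// line and at the start of the next.
std::pair<std::string, std::string> split_at(const std::string& text,
                                             const HyphenPoint& point) {
    assert(point.offset <= text.size());
    std::string head = text.substr(0, point.offset);
    if (point.kind == SplitKind::AddHyphen) {
        head += '-';
    }
    return {head, text.substr(point.offset)};
}
```

Splitting "monotonic" at offset 4 with AddHyphen gives "mono-" and "tonic". For "meta–avatar" the en-dash takes three bytes in UTF-8, so the split offset is 7 and the kind is NoHyphen, which leaves the first half as "meta–".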

Given an array of these enriched words the code computes another array of all possible points where the text stream can be split, both within and between words. The output of the line splitting algorithm is then yet another array. It contains the split points that were chosen. With this the final output can be created fairly easily: each output line is the text between split points n and n+1.
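The last step, turning chosen split points into lines, could look something like the sketch below. It works on plain strings rather than the enriched words, and the SplitPoint struct and build_lines function are hypothetical names invented for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical representation of a position in the word stream.
struct SplitPoint {
    std::size_t word_index;  // index of the word the position falls in
    std::size_t offset;      // byte offset inside that word, 0 means "before the word"
    bool add_hyphen;         // whether a line ending here gets a trailing hyphen
};

// Line n is the text between split points n and n + 1. The first split point
// is the start of the text and the last one is its end, expressed as
// word_index == words.size() and offset == 0.
std::vector<std::string> build_lines(const std::vector<std::string>& words,
                                     const std::vector<SplitPoint>& splits) {
    std::vector<std::string> lines;
    for (std::size_t n = 0; n + 1 < splits.size(); ++n) {
        const SplitPoint& from = splits[n];
        const SplitPoint& to = splits[n + 1];
        assert(to.word_index <= words.size());
        std::string line;
        for (std::size_t w = from.word_index; w < words.size() && w <= to.word_index; ++w) {
            const std::size_t begin = (w == from.word_index) ? from.offset : 0;
            const std::size_t end = (w == to.word_index) ? to.offset : words[w].size();
            if (end <= begin) {
                continue;  // no part of this word belongs to the line
            }
            if (!line.empty()) {
                line += ' ';
            }
            line += words[w].substr(begin, end - begin);
        }
        if (to.add_hyphen) {
            line += '-';
        }
        lines.push_back(line);
    }
    return lines;
}
```

With the words "a", "monotonic", "function", "grows" and split points at the start, inside "monotonic" at offset 4 (with a hyphen), before "grows" and at the end, this produces the lines "a mono-", "tonic function" and "grows".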

The one major typographical feature still missing is widow and orphan control. The code merely splits the page whenever it is full. Interestingly it turns out that doing this properly is done with the same algorithm as paragraph justification. The difference is that the penalty terms are things like "widow existence" and "adjacent page height imbalance".
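As a rough illustration of what such penalty terms might look like, here is a sketch that scores one candidate way of splitting a chapter into pages. Nothing like this exists in the code yet, so every name and weight below is made up. A widow is taken here to mean a paragraph's last line stranded at the top of a page, and an orphan a paragraph's first line stranded at the bottom.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical description of one page in a candidate split.
struct PageCandidate {
    int line_count;   // number of text lines placed on the page
    bool has_widow;   // page starts with the lone last line of a paragraph
    bool has_orphan;  // page ends with the lone first line of a paragraph
};

// Made-up weights; real values would need tuning.
constexpr double widow_penalty = 100.0;
constexpr double orphan_penalty = 100.0;
constexpr double imbalance_penalty = 10.0;

// Sum the penalties of a candidate split. Lower is better.
double total_penalty(const std::vector<PageCandidate>& pages) {
    double total = 0.0;
    for (std::size_t i = 0; i < pages.size(); ++i) {
        if (pages[i].has_widow) {
            total += widow_penalty;
        }
        if (pages[i].has_orphan) {
            total += orphan_penalty;
        }
        // Compare heights of adjacent pages, but skip the pair that includes
        // the final page, which is allowed to be short.
        if (i + 2 < pages.size()) {
            const int diff = pages[i].line_count - pages[i + 1].line_count;
            total += imbalance_penalty * diff * diff;
        }
    }
    return total;
}
```

An optimizer would then pick the split with the lowest total, for example preferring pages of 31 and 29 lines over two 30 line pages if the latter leaves a widow.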

But that, as they say, is another story. Which I have not written yet and might not do for a while because there are other fruit to fry.
