In my day job I'm a consultant. Every now and then my customer changes. This means setting up a new development environment and all that. Recently I started working for a Very Big customer who have a Very Corporative network setup. Basically:

- All network traffic must go through a corporate HTTP proxy. This means absolutely everything. Not one bit gets routed outside it.

- Said proxy has its own SSL certificate that all clients must trust and use for all traffic. Yes, this is a man-in-the-middle attack, but a friendly one at that, so it's fine.

This seems like a simple enough problem to solve. Add the proxy to the system settings, import the certificate to the global cert store and be done with it.

As you could probably guess by the title, this is not the case. At all. The journey to get this working (which I still have not been able to do, just so you know) is a horrible tale of never ending misery, pain and despair. Everything about this is so incredibly broken and terrible that it makes a duct taped Gentoo install from 2004 look like the highest peak of usability ever to have graced us mere mortals with its presence.

The underlying issue

When web proxies originally came into being, people added support for them in the least invasive and most terrible way possible: using environment variables. Enter http_proxy, https_proxy and their kind. Then the whole Internet security thing happened and people realised that this was far too convenient a way to steal all your traffic. So programs stopped using those envvars.

Oh, I'm sorry, that's not how it went at all.

Some programs stopped using those envvars whereas others did not. New programs were written and they, too, either used those envvars or didn't, basically at random. Those that eschewed envvars had a problem because proxy support is important, so they did the expected thing: everyone and their dog invented their own way of specifying a proxy. Maybe they created a configuration file, maybe they hid the option somewhere deep in the guts of their GUI configuration menus. Maybe they added their own envvars. Who's to say what is the correct way?

This was, obviously, seen as a bad state of things so modern distros have a centralised proxy setting in their GUI configurator and now everyone uses that.

Trololololololooooo! Of course they don't. I mean, some do, others don't. There is no logic to which ones do and which don't. For example you might think that GUI apps would obey the GUI option whereas command line programs would not, but in reality it's a complete crapshoot. There is no way to tell. There does not even seem to be any consensus on what the value of said option string should be (as we shall see later).
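For the programs that do obey the envvars, the lookup is roughly the following. This is a minimal sketch and not how any particular program does it: the function name proxies_from_env and the "lowercase spelling wins" rule are my own assumptions for illustration. Python's standard library has a real equivalent in urllib.request.getproxies(), which is printed alongside for comparison.

```python
import os
import urllib.request


def proxies_from_env(environ=None):
    """Collect <scheme>_proxy variables into a {scheme: url} dict.

    Hypothetical helper: if both spellings are set, the lowercase one wins.
    Note that no_proxy ends up under the key "no".
    """
    environ = os.environ if environ is None else environ
    found = {}
    for name, value in environ.items():
        lower = name.lower()
        if not value or not lower.endswith("_proxy"):
            continue
        scheme = lower[: -len("_proxy")]
        # Take the value if it uses the lowercase spelling or nothing is set yet.
        if name == lower or scheme not in found:
            found[scheme] = value
    return found


if __name__ == "__main__":
    print("hand-rolled:", proxies_from_env())
    print("stdlib:     ", urllib.request.getproxies())
```

Every program that skips this step needs its own configuration knob instead, which is how you end up with a gazillion of them.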

Since things were not broken enough already, the same thing happened with SSL certificates. Many popular applications will not use the system's cert store at all. Instead they prefer to provide their own artisanal hand-crafted certificates because the ones provided by the operating system have cooties. The official reason is probably "security" because as we all know if someone has taken over your computer to the extent that they can insert malicious security certificates into root-controlled locations, sticking to your own hand-curated certificate set is enough to counter any other attacks they could possibly do.

What does all of this cause then?

Pain.

More specifically the kind of pain where you need to do the same change in a gazillion different places in different ways and hope you get it right. When you don't, anything can and will happen or not happen. By my rough estimate, in order to get a basic development environment running, I had to manually alter proxy and certificate settings in roughly ten different applications. Two of these were web browsers (actually six, because I tried regular, snap and flatpak versions of both) and let me tell you that googling how to add proxies and certificates to browsers so you could access the net is slightly complicated by the fact that until you get them absolutely correct you can't get to Google.

Apt obeys the proxy envvars but lately Canonical has started replacing application debs with Snaps and snapd obviously does not obey those envvars, because why would you. Ye olde google says that it should obey either /etc/environment or snap set system proxy.http=. Experimental results would seem to indicate that it does neither. Or maybe it does and there exists a second, even more secret set of config settings somewhere.

Adding a new certificate requires that it is in a specific DERP format as opposed to the R.E.M. format out there in the corner. Or maybe the other way around. In any case you have to a) know what format your cert blob is and b) manually convert it between the two using the openssl command line program. If you don't, the importer script will just mock you for getting it wrong, without telling you what the right thing would have been, instead of doing the conversion transparently (which it could do, since it is almost certainly using OpenSSL behind the scenes).
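To show how little work the transparent conversion would be, here is a sketch using only Python's standard ssl module. It assumes the two formats in question are DER (binary) and PEM (base64 text between BEGIN/END CERTIFICATE lines). The function names to_pem and to_der and the "contains the PEM header" heuristic are mine; nothing here validates that the blob is actually a certificate.

```python
import ssl

PEM_HEADER = b"-----BEGIN CERTIFICATE-----"


def to_pem(blob: bytes) -> str:
    """Return the certificate as PEM text, whichever format it arrived in."""
    if PEM_HEADER in blob:
        # Already PEM: just decode the ASCII text.
        return blob.decode("ascii").strip() + "\n"
    # Heuristic assumption: anything without the header is raw DER.
    return ssl.DER_cert_to_PEM_cert(blob)


def to_der(blob: bytes) -> bytes:
    """Return the certificate as DER bytes, whichever format it arrived in."""
    if PEM_HEADER in blob:
        # The stdlib helper wants the text to start with the header.
        return ssl.PEM_cert_to_DER_cert(blob.decode("ascii").strip())
    return blob
```

An importer could call one of these on whatever it was handed and only complain if the result still fails to parse.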

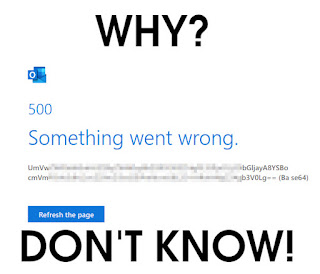

Even if every single option you can possibly imagine seems to be correct, 99% of the time Outlook webmail (as mandated by the customer) forces Firefox into an eternal login loop. The same settings work on a coworker's machine without issues.

Flatpak applications do not seem to inherit any network configuration settings from the host system. Chromium does not have a setting page for proxies (Firefox does) but instead has a button to launch the system proxy setting app, which does not launch the system proxy setting app. Instead it shows a page saying that the Flatpak version obeys system settings while not actually obeying said settings. If you try to be clever and start the Flatpak with a custom command, set proxy envvars and then start Chromium manually, you find that it just ignores the system settings it said it would obey and thus you can't actually tell it to use a custom proxy.

Chromium does have a way to import new root signatures but it then marks them as untrusted and refuses to use them. I could not find a menu option to change their state. So it would seem the browser has implemented a fairly complex set of functionality that can't be used for the very purpose it was created.

The text format for the environment variables looks like https_proxy=http://my-proxy.corporation.com:80. You can also write this in the proxy configuration widget in system settings. This will cause some programs to completely fail. Some, like Chromium, fail silently whereas others, like fwupdmgr, fail with Could not determine address for server "http". If there is a correct format for this string, the entry widget does not validate it.
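I have not looked at how any of these programs actually parse the value, so the following is pure guesswork. An error about a server called "http" is what you would get if something expected a bare host:port and split the string at the first colon. The sketch below contrasts that hypothetical naive parser with one that accepts both spellings; both function names are made up for illustration.

```python
from urllib.parse import urlsplit


def naive_host(value: str) -> str:
    """Hypothetical parser that assumes the value is a bare 'host:port'."""
    return value.split(":", 1)[0]


def tolerant_host_port(value: str):
    """Accept both 'scheme://host:port' and bare 'host:port'."""
    # urlsplit only recognises a network location after '//'.
    parts = urlsplit(value if "://" in value else "//" + value)
    return parts.hostname, parts.port


if __name__ == "__main__":
    proxy = "http://my-proxy.corporation.com:80"
    print(naive_host(proxy))
    print(tolerant_host_port(proxy))
    print(tolerant_host_port("my-proxy.corporation.com:80"))
```

If that guess is right, the settings widget could normalise the string once instead of leaving every consumer to trip over it separately.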

There were a bunch of other funnities like these but I have fortunately forgotten them. Some of the details above might also be slightly off because I have been battling with this thing for about a week already. Also, repeated bashes against the desk may have caused me head bairn damaeg.

How should things work instead?

There are two different kinds of programs. The first are those that only ever use their own certificates and do not provide any way to add new ones. These can keep on doing their own thing. For some use cases that is exactly what you want and doing anything else would be wrong. The second group does support new certificates. These should, in addition to their own way of adding new certificates, also use certificates that have been manually added to the system cert store as if they had been imported in the program itself.

There should be one, and only one, place for setting both certs and proxies. You should be able to open that widget, set the proxies and import your certificate and immediately after that every application should obey these new rules. If there is ever a case that an application does not use the new settings by default, it is always a bug in the application.

For certificates specifically the imported certificate should go to a separate group like "certificates manually added by the system administrator". In this way browsers and the like could use their own certificates and only bring in the ones manually added rather than the whole big ball of mud certificate clump from the system. There are valid reasons not to autoimport large amounts of certificates from the OS so any policy that would mandate that is DoA.

In this way the behaviour is the same, but the steps needed to make it happen are shorter, simpler, more usable, easier to document and there is only one of them. As an added bonus you can actually uninstall certificates and be fairly sure that copies of them don't linger in any of the tens of places they were shoved into.
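From an application's point of view, the "separate group" idea could look something like the sketch below. Everything specific in it is an assumption: the directory /etc/ssl/admin-added does not exist on any distro I know of, build_trust is a made-up name, and a real program would use its own TLS library rather than Python's ssl module. The point is only that the program keeps its own curated bundle and adds the administrator's certificates on top, without importing the whole system store.

```python
import os
import ssl

# Hypothetical location for "certificates manually added by the system
# administrator". No such standard directory exists today.
ADMIN_CERT_DIR = "/etc/ssl/admin-added"


def build_trust(app_bundle=None, admin_dir=ADMIN_CERT_DIR):
    """Build a TLS client context from the app's bundle plus admin-added certs."""
    # Starts with an empty trust store; the system store is NOT loaded.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    if app_bundle is not None:
        # The application's own hand-curated certificate set.
        ctx.load_verify_locations(cafile=app_bundle)
    added = []
    if os.path.isdir(admin_dir):
        for name in sorted(os.listdir(admin_dir)):
            if name.endswith((".pem", ".crt")):
                path = os.path.join(admin_dir, name)
                ctx.load_verify_locations(cafile=path)
                added.append(path)
    return ctx, added
```

Uninstalling a certificate then means deleting one file from one directory.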

Counters to potential arguments

In case this blog post gets linked to the usual discussion forums, there are a couple of kneejerk responses that people will post in the comments, typically without even reading the post. To save everyone time and effort, here are counterarguments to the most obvious issues raised.

"The problem is that the network architecture is wrong and stupid and needs to be changed"

Yes indeed. I will be more than happy to bring you in to meet the people in charge of this Very Big Corporation's IT systems so you can work full time on convincing them to change their entire network infrastructure. And after the 20+ years it'll take I shall be the first one in line to shake your hand and tell everyone that you were right.

"This guy is clearly an incompetent idiot."

Yes I am. I have gotten this comment every time my blog has been linked to news forums so let's just accept that as a given and move on.

"You have to do this because of security."

The most important thing in security is simplicity. Any security system that depends on human beings needing to perform needlessly complicated things is already broken. People are lazy, they will start working around things they consider wrong and stupid and in so doing undermine security way more than they would have done with a simpler system. In other words:

And finally

Don't even get me started on the VPN.

Great post, it makes me even happier that I am retiring.

On the bright side, once you get it working, it should provide more consultation work with every upgrade going forward.

Ok, I just can't resist the temptation to add another question. Does it have to be full Linux, or would it work if you used the Big Corporation's administered laptop (I guess they have Processes for setting up this stuff on Windows) for corporate stuff and a guest VM or the Linux subsystem for the part that absolutely needs Linux?

That's roughly what I ended up doing. Virtualbox will only allow me to use half of the cores, though. There's also antivirus sw on the Windows image to further slow things down.

I'm a bit surprised that they even considered allowing connecting a computer that's not running their remote management software or even Corporate Approved Antivirus to their network. The core limitation sounds a lot like every time I've tried to use VirtualBox...

Shitty devs make shitty options. Things are hard, choice creates options, more news at 11. Back to you in the studio.
